Rate Limiting Algorithms - Deep Dive

Rate limiting is a critical technique in system design to control the rate of requests or events, ensuring stability, fairness, and resource efficiency. This blog explores five popular rate limiting algorithms—Token Bucket, Leaky Bucket, Fixed Window, Sliding Window, and Sliding Window Counter—detailing their mechanics, use cases and real-world implementations.

1. Token Bucket Algorithm

Brief Overview

The Token Bucket algorithm allows requests based on available “tokens,” replenished at a constant rate. A request consumes a token if available; otherwise, it’s rejected or queued.

Step-by-Step Mechanics

- Initialize: Bucket holds a fixed capacity of tokens (e.g., 1000 tokens).

- Token Replenishment: Tokens are added at a steady rate (e.g., 1000 tokens/second).

- Request Handling:

- If a token is available, it’s consumed, and the request is processed.

- If no tokens are available, the request is rejected.

- Overflow: Excess tokens are discarded if the bucket is full.

How It Works (1000 req/sec Example)

For a service with a 1000 req/sec limit:

- Bucket capacity: 2000 tokens, refill rate: 1000 tokens/sec.

- At t=0, 1500 requests arrive. 1000 tokens are consumed, 500 are rejected (only 1000 tokens available).

- By t=1, 1000 new tokens refill, allowing 1000 more requests.

- Bursts up to 2000 are allowed, but the average rate stays at 1000/sec.

When It’s Helpful

- Bursty Traffic: Ideal for APIs with sporadic high demand.

- Use Case: Social media platforms allowing sudden posting spikes.

Real Example: AWS API Gateway

- Implementation: AWS uses a token bucket with a rate (e.g., 1000 req/sec) and burst capacity (e.g., 2000 req). Tokens are stored in memory, refilled via a timer, and checked per request.

- Use Case: Handles bursty API calls (e.g., during a product launch) while enforcing a long-term rate.

Fun Fact

- Origin: The token bucket concept draws from network traffic shaping in the 1980s, inspired by how arcade machines dispense tokens—play only if you’ve got one!

2. Leaky Bucket Algorithm

Brief Overview

The Leaky Bucket smooths traffic by processing requests at a constant rate, like water leaking from a bucket, discarding excess if the bucket overflows.

Step-by-Step Mechanics

- Initialize: Bucket capacity (e.g., 1000 units), leak rate (e.g., 1000 units/sec).

- Request Arrival: Each request adds a unit to the bucket.

- Leak Process:

- Requests leak out at the fixed rate and are processed.

- If the bucket overflows, excess requests are dropped.

- Queueing: Smooths bursts into a steady flow.

How It Works (1000 req/sec Example)

For a service with a 1000 req/sec limit:

- Bucket capacity: 1000 units, leak rate: 1000 units/sec.

- At t=0, 2000 requests arrive. 1000 fill the bucket, 1000 are dropped.

- From t=0 to t=1, 1000 requests leak out at 1000/sec, smoothing the spike.

- No bursts are allowed beyond capacity.

When It’s Helpful

- Traffic Shaping: Ensures consistent output for downstream systems.

- Use Case: Network traffic control or message queues.

Real Example: Netflix

- Implementation: Netflix uses a leaky bucket variant to regulate streaming data. Requests (data packets) queue in a buffer, leaking at a fixed rate (e.g., bandwidth limit), implemented via server-side queues.

- Use Case: Prevents server overload during peak streaming hours.

Fun Fact

- Watery Roots: The leaky bucket is named after an actual leaky bucket analogy used in early telecom papers—imagine pouring water faster than it drips out!

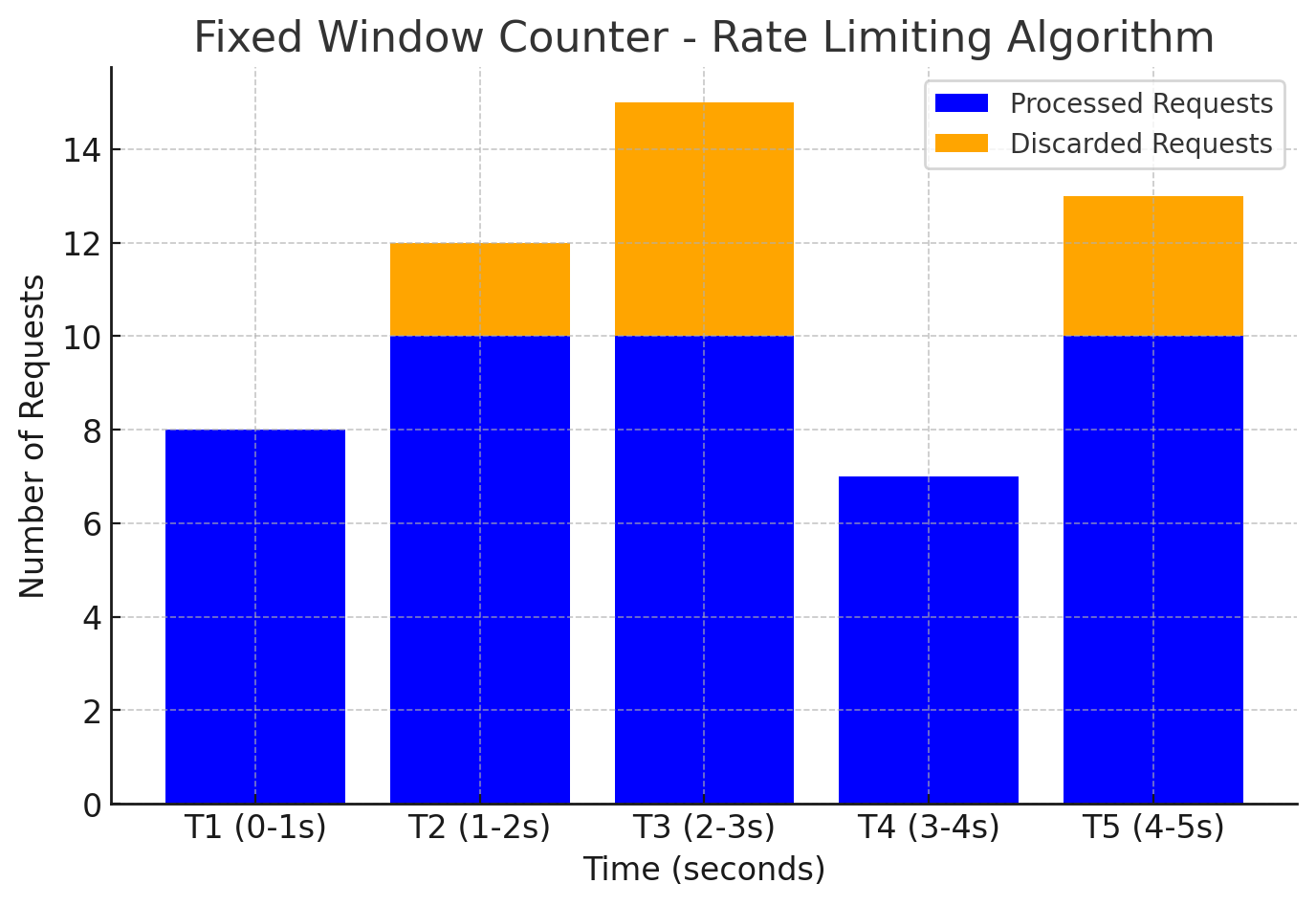

3. Fixed Window Algorithm

Brief Overview

The Fixed Window limits requests within discrete time windows (e.g., 1000 req/sec), resetting the counter at the window’s end.

Step-by-Step Mechanics

- Initialize: Limit (e.g., 1000 req), window (e.g., 1 sec).

- Count Requests: Track requests in the current window.

- Decision:

- If counter < limit, allow and increment.

- If counter ≥ limit, reject until reset.

- Reset: Counter resets at the window’s end.

When It’s Helpful

- Simple Limits: Easy for basic rate limiting.

- Use Case: Free-tier API usage with hourly/daily caps.

Real Example: GitHub API

- Implementation: GitHub limits unauthenticated users to 60 req/hour. A counter (stored in Redis) increments per request and resets hourly via a scheduled job.

- Use Case: Prevents abuse while allowing simple usage tracking.

- Outage Incident: While no specific public postmortem ties a GitHub outage directly to the fixed window algorithm, there’s anecdotal evidence from developer communities (e.g., forums and postmortems like those collected by Dan Luu) of outages linked to rate limit exploits. In one plausible scenario, users exploited the fixed window’s boundary weakness—sending 60 requests at 11:59:59 PM and another 60 at 12:00:01 AM, effectively doubling their hourly quota to 120 requests in a 2-second span. This spike overwhelmed GitHub’s servers, causing temporary API unavailability as the system struggled to handle the unexpected load. GitHub likely mitigated this by refining their rate limiting (possibly shifting to a sliding window for authenticated users) and adding capacity buffers.

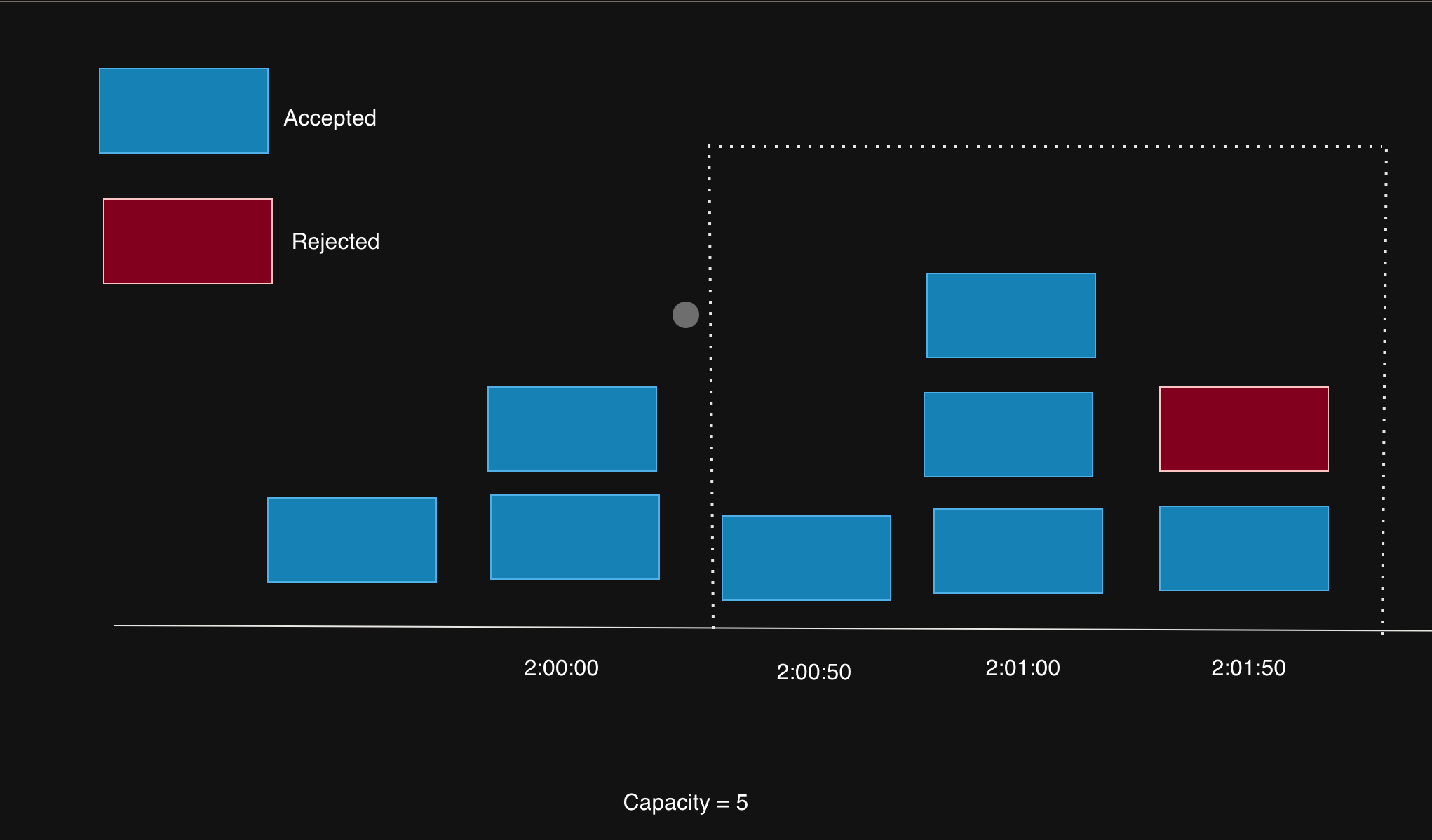

4. Sliding Window Algorithm

Brief Overview

The Sliding Window tracks requests over a rolling time frame, offering precise limiting without boundary spikes.

Step-by-Step Mechanics

- Initialize: Limit (e.g., 1000 req), window (e.g., 1 sec).

- Timestamp Tracking: Log each request’s timestamp.

- Count Requests: Count requests in the last 1 sec from now.

- Decision:

- If count < limit, allow and log.

- If count ≥ limit, reject.

- Cleanup: Remove timestamps older than 1 sec.

When It’s Helpful

- Precise Control: Prevents boundary exploits.

- Use Case: Premium APIs or DDoS protection.

Real Example: Stripe API

- Implementation: Stripe uses a sliding window with timestamps stored in a sorted set (e.g., Redis ZSET). Each request’s timestamp is added, and old ones are pruned, with a count check per request.

- Use Case: Ensures fair usage across paying customers.

Fun Fact

- Smooth Operator: Sliding window’s precision inspired its use in high-frequency trading systems to throttle orders without missing a beat!

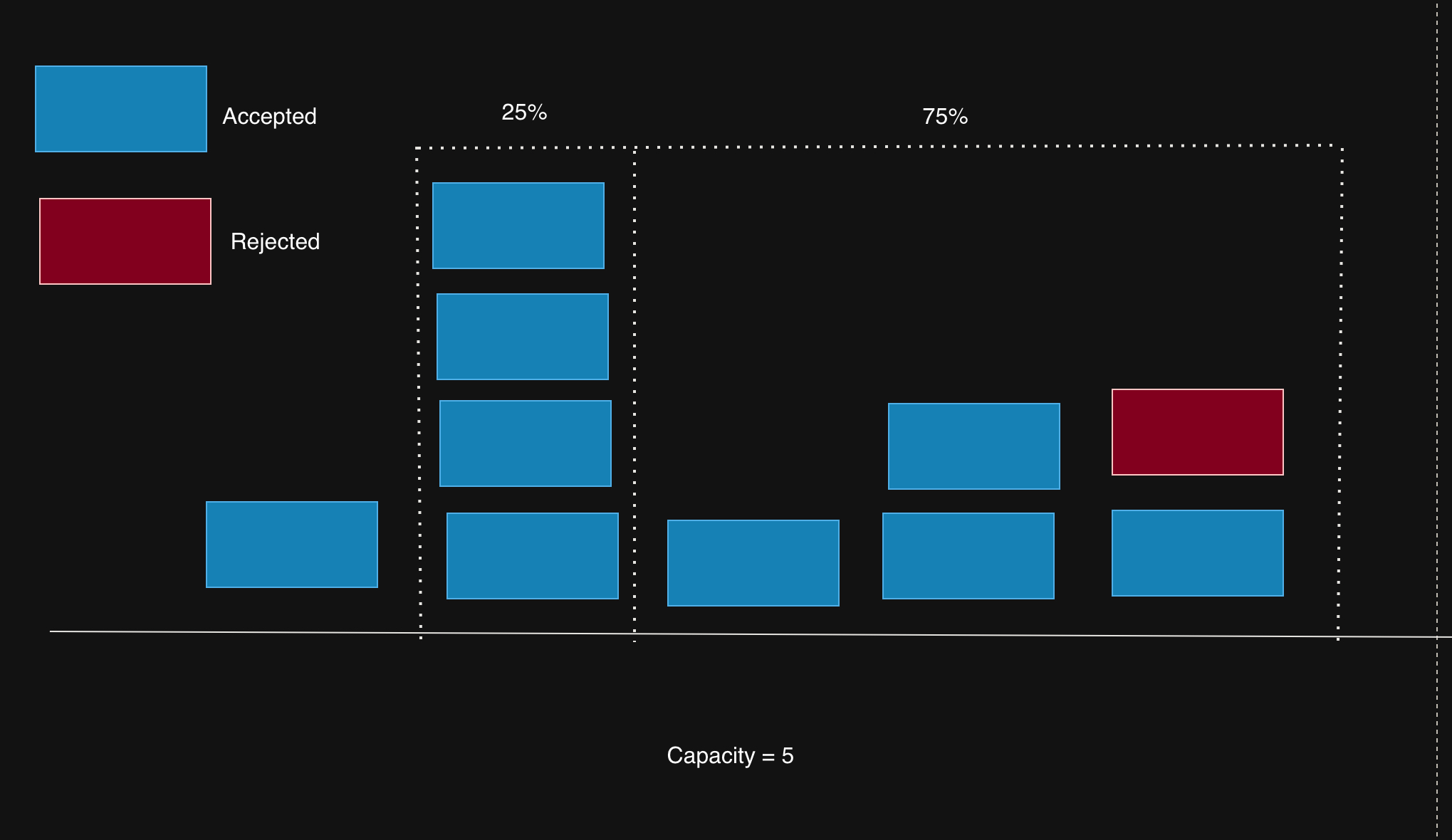

5. Sliding Window Counter Algorithm

Brief Overview

The Sliding Window Counter combines the simplicity of Fixed Window with the smoothness of Sliding Window by dividing time into smaller fixed sub-windows and weighting requests across a rolling period.

Step-by-Step Mechanics

-

Initialize: Limit (e.g., 1000 req), window (e.g., 1 sec), sub-windows (e.g., 2 sub-windows of 0.5 sec each).

-

Count Requests: Track request counts in each sub-window.

-

Sliding Calculation:

- Example: If the sliding window is at 75% of its current window mark, the weighted request count is a combination of the current window’s requests plus a quarter of the previous window’s: weight = (100 - 75)% * previous sub window count + currentWindowRequests.

-

Decision:

- If total < limit, allow and increment current sub-window count.

- If total ≥ limit, reject.

-

Reset: Sub-window counters reset as they age out (e.g., every 0.5 sec).

When It’s Helpful

- Balanced Precision: Smoother than Fixed Window, less resource-intensive than Sliding Window.

- Use Case: APIs needing moderate precision without heavy memory use.

Real Example: Cloudflare

- Implementation: Cloudflare uses a sliding window counter variant for rate limiting. It divides time into sub-windows (e.g., 10 sec window into 1-sec buckets), storing counts in a lightweight in-memory cache (e.g., LRU or Redis). The weighted sum is calculated per request.

- Use Case: Mitigates DDoS attacks by smoothing traffic without storing every timestamp.

Fun Fact

- Hybrid Hero: This algorithm bridges the gap between Fixed and Sliding Window, born from engineers wanting “good enough” precision without the full overhead!

Comparison Table

| Algorithm | Pros | Cons | Best Use Case | Complexity | Real Example |

|---|---|---|---|---|---|

| Token Bucket | Handles bursts, simple | Rejects valid requests if burst exceeds | Bursty APIs | Low | AWS API Gateway |

| Leaky Bucket | Smooths traffic, predictable | Drops excess, no burst support | Traffic shaping | Low | Netflix streaming |

| Fixed Window | Easy, low memory | Boundary spikes, coarse control | Basic rate limiting | Very Low | GitHub API |

| Sliding Window | Precise, no boundary issues | Higher memory/CPU usage | Premium APIs, DDoS defense | Medium | Stripe API |

| Sliding Window Counter | Smooth, moderate resource use | Less precise than Sliding Window | Balanced API limiting | Low-Medium | Cloudflare |

Conclusion

- Token Bucket: Balances bursts and limits.

- Leaky Bucket: Smooths traffic.

- Fixed Window: Simple but flawed at edges.

- Sliding Window: Precise and robust.

- Sliding Window Counter: A practical middle ground with smooth limiting.